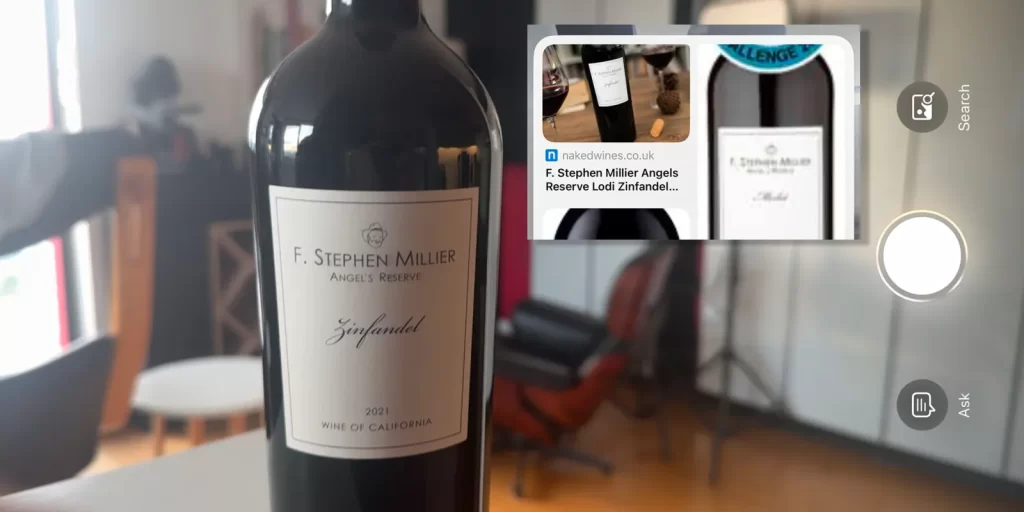

I know, it’s just a developer beta, and if anyone from Apple asks, my Visual Intelligence first impressions are offered purely in the context of its future potential. But I have to say that trying it leaves me excited for that future.

The actual Apple Intelligence part is currently fairly limited. In most cases, you get a choice between asking ChatGPT to describe what you are seeing and running a Google search …

From what we can tell, Apple Intelligence performs a few functions directly:

But for object recognition, the feature currently appears to rely exclusively on ChatGPT and Google. In my limited experience so far, Google is by far the more impressive of the two.

I tend to research most of my non-trivial purchases extensively before pressing the buy button. I read reviews, ask friends for advice, and generally interrogate Google to the nth degree.

So if I see something in use somewhere and want to check it out, I need specifics: telling me that I’m looking at a compact bean-to-cup coffee machine or similar wouldn’t really cut it.
